Now for a technical article.

How can several parallel programs maintain a consistent view of state? By this I mean: how can two programs, possibly in different countries, manipulate common state variables in a consistent manner? And how can they do so in a way that does not involve any locking?

The answer is surprisingly simple and incredibly beautiful and makes use of something called a transaction memory.

How do transaction memories work?

To start with, I must explain why concurrent updates to data represent a problem.

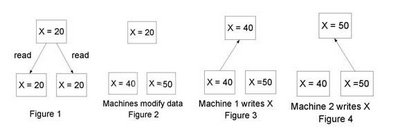

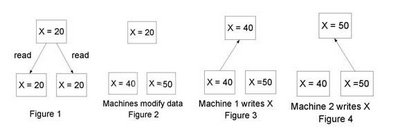

Imagine a server S with some state variable X and two clients C1 and C2. The clients fetch the data from the server (figure 1). Now both clients think that X=20. C1 increases X by 20 and C2 increases X by 30. They modify their local copies (figure 2) and write the data back to the server (figures 2 and 3). If these updates had been performed one after the other, the final value of X stored on the server would have been 70. Instead it is 50 (or 40, if C1 happens to write last): one of the updates has been lost, so obviously something has gone wrong.
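The arithmetic of the lost update can be traced in a few lines of Erlang. This is only a sketch of the scenario above, with no real server or clients: the module name `lost_update` and both function names are made up for illustration, and the ordering "C2 writes last" is an assumption.

```erlang
-module(lost_update).
-export([unsynchronised/0, sequential/0]).

%% Both clients read X before either writes, so one increment is lost.
unsynchronised() ->
    Server0 = 20,
    C1 = Server0,               % C1 fetches X = 20
    C2 = Server0,               % C2 fetches X = 20
    _AfterC1 = C1 + 20,         % C1 writes back 40
    AfterC2 = C2 + 30,          % C2 writes back 50, overwriting the 40
    AfterC2.

%% Each client reads the value the previous client wrote.
sequential() ->
    Server0 = 20,
    AfterC1 = Server0 + 20,     % C1 reads 20, writes 40
    AfterC2 = AfterC1 + 30,     % C2 reads 40, writes 70
    AfterC2.
```

Calling `lost_update:unsynchronised()` gives 50 and `lost_update:sequential()` gives 70, which are the two outcomes compared in the text.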

The conventional way to solve this problem is to lock the server while the individual transactions are taking place, but this approach is fraught with problems, as I have pointed out in an earlier article.

To allow these updates to proceed in parallel and without recourse to locking, we can make use of a so-called transaction memory.

Transaction Memory

A transaction memory is a set of tuples (Var,Version,Value). In the diagram X has version 1 and value 20. Y has version 6 and value true.

The version number represents the number of times the variable has changed.

Now let's try and do a transaction. Suppose we want to change X and Y. First we send a {get,X,Y} message to the server. It responds with the values of the variables and their version numbers.

Having changed the variables we send a {put,(X,1,30),(Y,6,true)} message back to the server. The server will accept this message only if the version numbers of all the variables agree with the versions it currently holds. If this is the case then the server accepts the changes and replies yes. If any of the version numbers disagree, then it replies no and changes nothing.

Clearly, if a second process manages to update the memory before the first process has replied, then the version numbers will not agree and the update will fail.

Note that this algorithm does not lock the data and works well in a distributed environment where the clients and servers are on physically different machines with unknown propagation delays.
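A client whose put is refused would normally fetch the variables again and retry. The text does not spell this out, so the following is a minimal sketch of one way to do it, not the article's own code. The module `tm_client`, the function `transact/3` and its three fun arguments are hypothetical names; the only thing assumed from the protocol is that a put answers `yes` or `no`.

```erlang
-module(tm_client).
-export([transact/3]).

%% transact(Get, Put, Fun) -> {ok, Updates}
%%   Get()        -> [{Var, Vsn, Val}]   fetch a fresh snapshot
%%   Fun(Snap)    -> [{Var, Vsn, NewVal}] compute the new values,
%%                                        keeping the version numbers read
%%   Put(Updates) -> yes | no            offer the update to the server
%%
%% Retries until the server accepts. There is no retry limit and no
%% back-off here; a real client would probably want both.
transact(Get, Put, Fun) ->
    Snapshot = Get(),
    Updates = Fun(Snapshot),
    case Put(Updates) of
        yes -> {ok, Updates};
        no  -> transact(Get, Put, Fun)   % someone else got in first
    end.

%% Possible wiring to a server P, assuming a module with the
%% getVars/putVars interface implemented later in this article:
%%
%%   Get = fun() -> [{ok,{V,Val}}] = tm:getVars(P, [x]), [{x,V,Val}] end,
%%   Put = fun(Us) -> tm:putVars(P, Us) end,
%%   Add = fun([{x,V,Val}]) -> [{x,V,Val+20}] end,
%%   tm_client:transact(Get, Put, Add).
```

Because the new value is recomputed from a fresh snapshot on every attempt, two clients adding 20 and 30 this way end with 70 whichever one wins the first round.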

Isn't this just the good old test-and-set operation generalised over sets?

Yes - of course it is. If you think back to how mutexes were implemented, they made use of semaphores. Semaphores were implemented by an atomic test-and-set instruction. A semaphore can only have the value zero or one. The test-and-set operation said "if the value of this variable is zero then change it to one", and this operation was performed atomically. A critical region was protected by a flag. If the flag was zero then the region could be reserved; if the flag was one then it was being used. To avoid two processes simultaneously reserving the region, test-and-set must be atomic. Transaction memories merely generalise this method.
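For comparison, here is a rough sketch of such a flag using the `atomics` module from Erlang/OTP (available from OTP 21.2). Strictly speaking it uses compare-and-exchange rather than a hardware test-and-set instruction, and the module name `tas` and its function names are made up for this illustration.

```erlang
-module(tas).
-export([new/0, try_acquire/1, release/1]).

%% A one-word flag: 0 = free, 1 = in use. Atomics start at zero.
new() ->
    atomics:new(1, []).

%% Atomically: if the flag is 0, change it to 1.
%% Returns true if we reserved the region, false if it was taken.
try_acquire(Flag) ->
    atomics:compare_exchange(Flag, 1, 0, 1) =:= ok.

%% Mark the region as free again.
release(Flag) ->
    atomics:put(Flag, 1, 0).
```

The put message of a transaction memory plays the same role as `try_acquire/1`, except that the expected value is a version number and the check covers a whole set of variables at once.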

Now let's implement this in Erlang:

-module(tm).

-export([new/0, addVar/2, getVars/2, putVars/2]).

%% new() -> Pid
%%   create a new transaction memory (TM)
%%
%% addVar(Pid, Var) -> ok
%%   add a variable to the TM store
%%
%% getVars(Pid, [V1,...]) -> [{ok,{Vsn,Data}} | error, ...]
%%   look up variables V1, V2, ... in the TM
%%
%% putVars(Pid, [{Var,Vsn,Data}]) -> yes | no
%%   update variables in the TM

%% Here's a sample run
%%
%% 1> c(tm).
%% {ok,tm}
%% 2> P=tm:new().
%% <0.47.0>
%% 3> tm:addVar(P,x).
%% ok
%% 4> tm:addVar(P,y).
%% ok
%% 5> tm:getVars(P, [x,y]).
%% [{ok,{0,void}},{ok,{0,void}}]
%% 6> tm:putVars(P, [{x,0,12},{y,0,true}]).
%% yes
%% 7> tm:putVars(P, [{x,1,25}]).
%% yes
%% 8> tm:getVars(P, [x,y]).
%% [{ok,{2,25}},{ok,{1,true}}]
%% 9> tm:putVars(P, [{x,1,15}]).
%% no

new() -> spawn(fun() -> loop(dict:new()) end).

addVar(Pid, Var)   -> rpc(Pid, {create, Var}).
getVars(Pid, Vars) -> rpc(Pid, {get, Vars}).
putVars(Pid, New)  -> rpc(Pid, {put, New}).

%% internal
%%
%% remote procedure call: send the request, then wait for the
%% reply tagged with the server's Pid

rpc(Pid, Q) ->
    Pid ! {self(), Q},
    receive
        {Pid, Reply} -> Reply
    end.

%% server loop: Dict maps Var -> {Vsn, Val}

loop(Dict) ->
    receive
        {From, {get, Vars}} ->
            %% each entry is {ok,{Vsn,Val}}, or error for an unknown variable
            Vgs = lists:map(fun(I) -> dict:find(I, Dict) end, Vars),
            From ! {self(), Vgs},
            loop(Dict);
        {From, {put, Vgs}} ->
            case update(Vgs, Dict) of
                no ->
                    %% rejected: carry on with the old Dict
                    From ! {self(), no},
                    loop(Dict);
                {yes, Dict1} ->
                    From ! {self(), yes},
                    loop(Dict1)
            end;
        {From, {create, Var}} ->
            From ! {self(), ok},
            loop(create_var(Var, Dict))
    end.

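%% update(Updates, Dict) -> {yes, Dict1} | no
%%   apply every update or none: a single stale or unknown
%%   variable rejects the whole put

update([{Var,Generation,Val}|T], D) ->
    case dict:find(Var, D) of
        {ok, {Generation, _}} ->
            update(T, dict:store(Var, {Generation+1, Val}, D));
        _ ->
            no
    end;
update([], D) ->
    {yes, D}.

%% new variables start at version 0 with the value void;
%% creating an existing variable leaves it untouched

create_var(Var, Dict) ->
    case dict:find(Var, Dict) of
        {ok, _} -> Dict;
        error   -> dict:store(Var, {0,void}, Dict)
    end.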